AI Won’t Replace You, Until Your Boss Makes It Your KPI

We said AI would augment us. Now it’s a checkbox to prove we’re still useful.

Two weeks ago, Shopify’s CEO Tobi Lütke sent a memo to staff. It wasn’t meant to be public—but it leaked. In it, he told employees that “reflexive AI usage is now a baseline expectation.” Before any team asks for more budget or more people, they need to prove that AI can’t do the job. AI proficiency will now be part of performance reviews.

On the surface, it sounds bold. Progressive, even.

But read it again. That’s not innovation. That’s a policy built around cost-cutting, not creativity.

And that’s the problem.

I believe in AI. I think it’s one of the most important and exciting tools we’ve ever created. I use it every day. This article was drafted with its help. But I use it on my terms—as a supplement to thinking, not a replacement for it.

That’s the line companies are starting to erase.

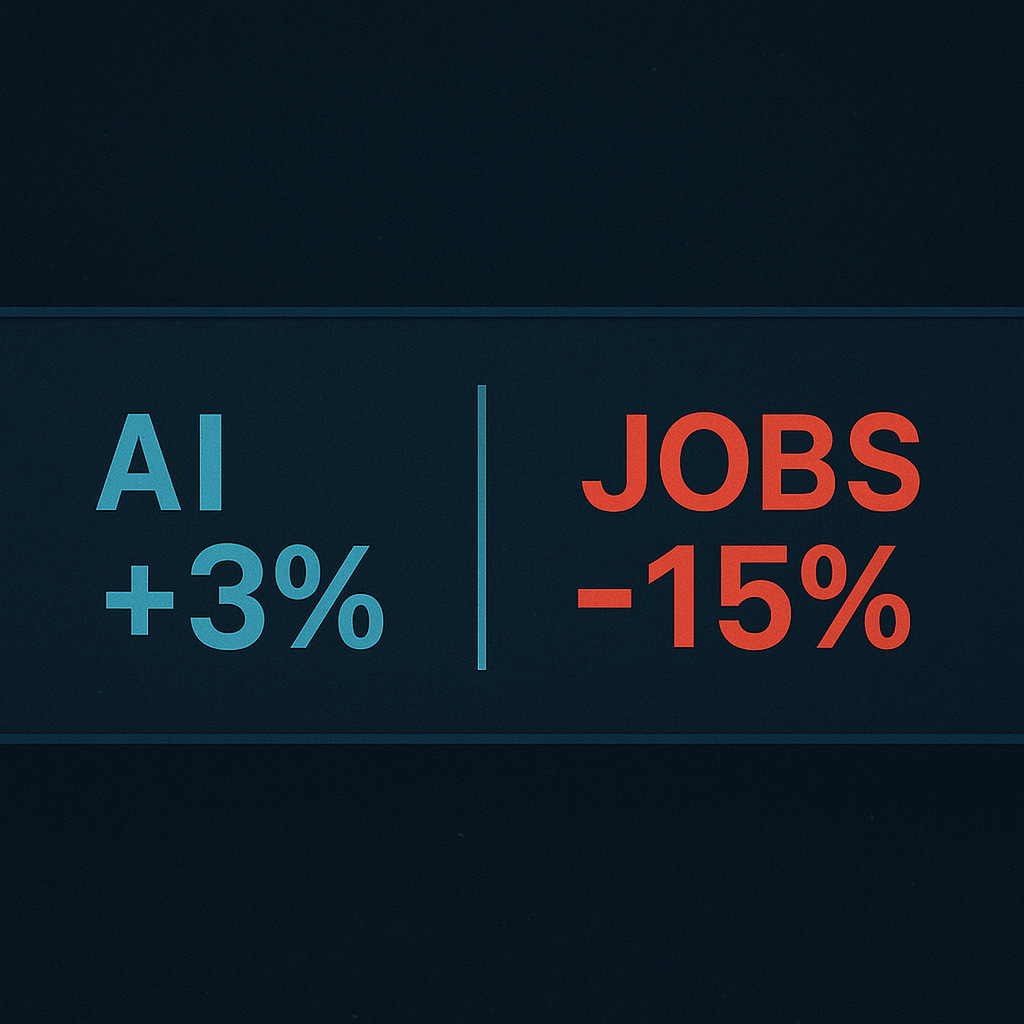

What we’re seeing across the industry isn’t thoughtful integration. It’s a scramble to automate—fast. Not to empower people, but to prove fewer of them are needed. Not to make the work better, but to make it cheaper.

It’s AI as a blunt instrument, not a breakthrough.

Don’t believe me?

Shopify isn’t alone. According to Forbes, more and more companies are making AI usage a baseline expectation. This isn’t just about testing new tools—it’s a restructuring of work culture itself, where being “AI-proficient” becomes part of how you're evaluated, regardless of your role.

Cognition’s Devin, marketed as the first “AI software engineer,” can plan, code, debug, and ship. But the narrative around it isn’t about helping developers. It’s about replacing them. Artisan AI’s pitch is even more direct: their “digital workers” are sales reps, marketers, and assistants—autonomous, and designed to reduce headcount. Ava, their AI SDR, doesn’t assist a human. She is the team.

Klarna replaced 2,000 employees with AI-powered support. The IRS has quietly let go of thousands of staff, citing “efficiency gains through automation.” Talk about government efficiency. How many mouths lost food for that metric? Even public institutions are now optimizing away the very people they were built to serve.

And in warehouses, Agility Robotics’ Digit is rolling out beside human workers. But let’s be honest: no one’s investing billions in humanoid robots because they love collaboration. They want headcount without the people.

The message is everywhere: if AI can do it, you’d better justify why you’re still here.

When the Default Is the Machine

There’s a big difference between encouraging people to explore AI and mandating that they justify their existence against it.

The former builds capability. The latter builds fear.

When you treat AI as the default, humans stop trying to think beyond it.

I know I do. It’s a struggle.

Once the tool is there—fast, confident, fluent—it’s hard not to lean on it. Hard not to let it take the first pass. Then the second. Then the direction entirely. You start trusting it more than your own instincts. Not because it’s better—but because it’s easier.

And when that becomes the norm—when “reflexive use” is no longer a behavior but a requirement—we’re not augmenting people. We’re narrowing them.

It’s easy to say you’re being efficient. But efficiency is not the same as innovation. Especially when it comes at the cost of risk, play, or dissent.

You don’t get new ideas by optimizing the ones you already had. You get them by thinking past the obvious.

And right now, we’re training people not to.

Short-Term Thinking, Long-Term Cost

I understand the temptation. AI looks like savings. It looks like leverage. It fits cleanly on a slide in a quarterly review.

But if your model for the future is “replace people with prediction engines,” you’re not building the future. You’re slowly phasing it out.

This isn’t theoretical. It’s already happening. Layoffs come first. Then the press release about the company’s “AI pivot.” LLM usage becomes “table stakes.” And the people who built the company now have to defend their jobs from a tool they didn’t ask for.

AI can absolutely make us better. But only if we still trust humans to think. To build. To question. To care.

Take that away, and you’re not left with a smarter company. You’re left with a quieter one.

But here’s the real question no one seems to be asking:

When everyone’s been replaced by AI—when the coders are gone, the SDRs replaced, the warehouse teams automated, the analysts prompted into silence—who exactly is going to be able to afford all these products?

Liked this piece? Read the companion post:

LLMs Are Our Sophons

(This is a version of my original post, titled LLMs: The Three-Body Lock-in. This one reflects how I think and write; the other is the “more professional” version.)
